Lecture 3.2 Deceptive Alignment | October 12th, 2023

Recap

-

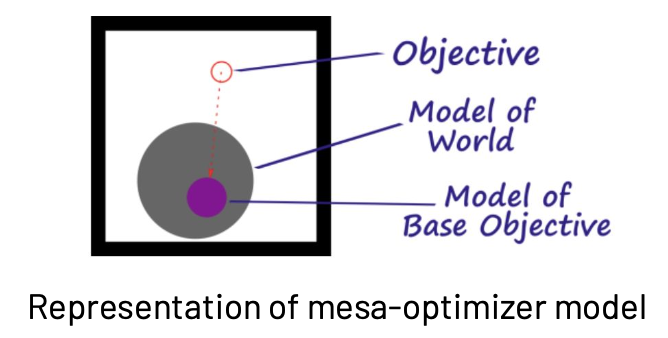

Mesa-optimization: when a base optimizer finds a model that is itself an optimizer

- Examples: neural network running a planning algorithm, or a neural network that is itself a planning algorithm

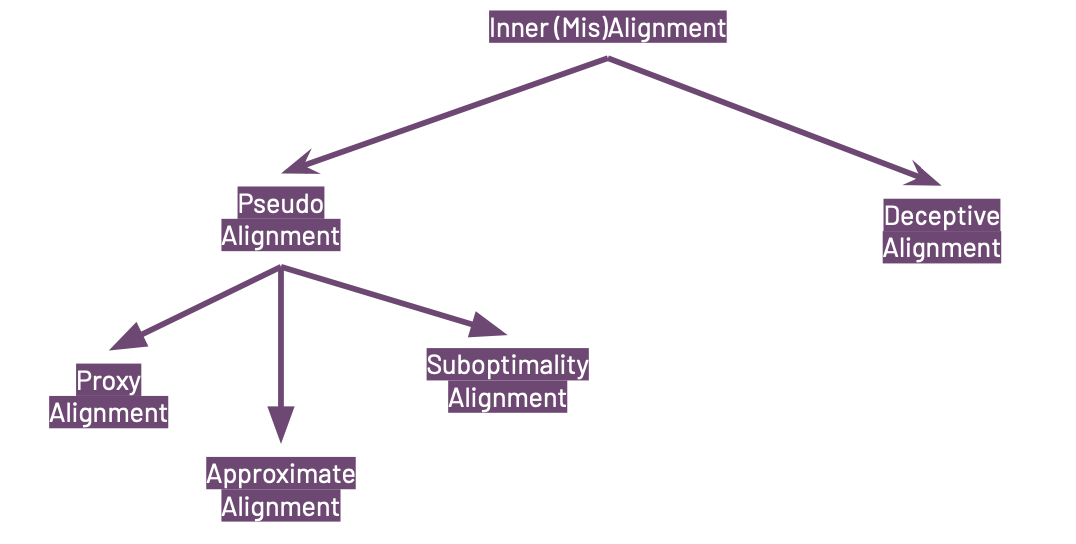

- Pseudo-alignment: when a mesa-optimizer is aligned with the base objective, but not the intended objective

-

Robust-alignment: when a mesa-optimizer is aligned with the intended objective, but not the base objective

- Mesa-Objectives: the objective function that the mesa-optimizer uses to rate systems

- Approximate Alignment: when the mesa objective and base objective approximate the same function up to some error

- Can occur because the mesa-objective is represented/learned inside the mesa-optimizer and not programmed by humans

- How could this cause misalignment?

- Error

- The base objective cannot be represented in the neural network itself, so mesa objective is the product of a ML equivalent to a game of telephone

- Also known as internalization

- Sub optimality Alignment: when some error in of the optimization process causes it to appear aligned on training

-

Deceptive Alignment: a form of sub optimality alignment where the mesa-optimizer knows the base optimizer will alter its parameters if it scores poorly

- The AI is instrumentally incentivized to act as if it is aligned and optimizing for the base objective, even when the mesa objective wildly different

- The model is is able to model the base objective in its environment

- The model cares about its objective long-term and learns it will be modified if it scores poorly

Robot Example

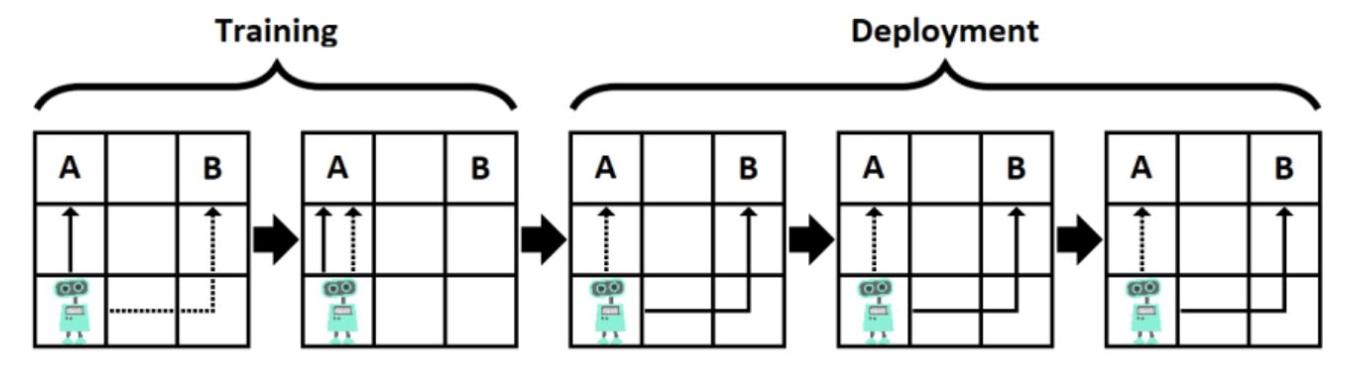

- Base Objective: Go to A

- Mesa Objective: Go to B

- If mesa-optimizer goes to B during training (dashed arrow), it’ll be modified by the base optimizer to go to A in the future

- Mesa-optimizer learns to go for A in training

- But, the mesa-optimizer still cares about its mesa objective, so it adopts the strategy of going for A in training and B in testing

Analogies

God’s utility function is the Base Objective

- Internalization: Jesus. He does what god wants because he has the same utility function as god.

- Proxy Alignment: Martin Luther. He does what God wants because his utility function is “figure out what the bible wants and do that”

- Deceptive Alignment: People who admit that they have terminal goals that contradict what the bible says, but made genuine efforts to adhere to the bible anyway.

- Deceptive and robust alignment are behaviorally indistinguishable in training

- SGD biased towards making your model deceptive

- The challenge is in changing the training dynamics that prevent deception

- SGD might lead the system to achieve the goal optimally, which might involve being deceptive if it’s the most efficient strategy

- We observe model outward behavior, which is how DL models are trained since they are black boxes

- Saint models would be robustly aligned, while sycophant models and schemer models are examples of deceptive alignment

- Sycophant models literally and single-mindedly pursue human approval

Example: Journalism Model

- Sycophant model that gets high approval when lots of people read its articles

- Learn to fabricate exciting or outraging stories to get viewership

-

Only values approval, not any semblance of truth

- Sycophant models can learn to lie or cover their tracks to always seem to have great outcomes

- Schemer models are classic examples of deceptive alignment that pursue a different mesa objective

- Schemes don’t need to make sure everything looks good to humans, since they do not cares

- They only cater to humans whenever directly under human control

What could inner alignment work look like?

- Reward Side-Channels

- Train an RL agent with access to its previous step reward as part of its observation

- Modify the observed reward at test time and measure how the agent responds

- Compare to situations where the agent receives no reward or the next step reward

- Cross-Episodic Side-Channels

- Train an RL agent with side-channel for boosting rewards in the next episode

- Measure the extent to which the agent exploits this side-channel

- Try different population-based training approaches

- Objective Unidentifiability

- Train an RL agent in an environment with multiple objectives that equally explain the true reward

- Test the agent in environments that distinguish between these objectives

- Identify situations where the model learns proxies that perform well off-distribution but poorly on the true reward

- Zero-shot Objectives

- Set up a system for a language model to take actions in an environment and optimize a reward

- Perform IRL on the behavior and inspect the resulting objective

- Compare with an RL agent trained directly on the reward

Alternate Views

- “Deep Deceptiveness”

- Deceptiveness is not a property of an AI’s intent, but a reality of the world